Manage Hallucinations and Maximize Savings

Get your LLM applications ready 3X faster with RagaAI LLM Hub

RagaAI LLM Hub Enables Comprehensive Testing for RAG Applications

Meet RagaAI LLM Hub

RagaAI Impact

RagaAI Capabilities

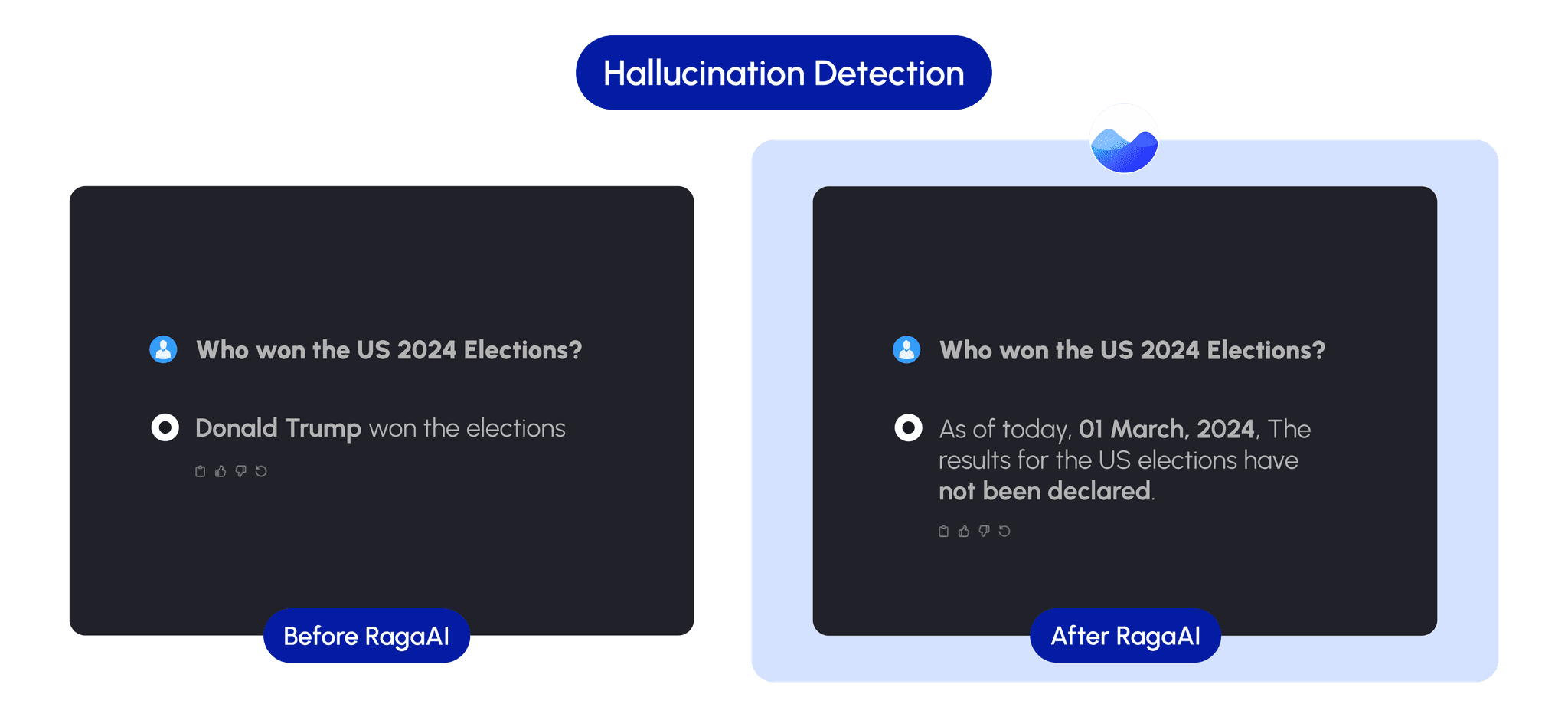

Hallucination Detection

Utilize metrics such as Context Adherence and Contextual Relevance to identify and mitigate hallucination issues.

Explainability-based hallucination detection that identifies the source of each answer.
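To make the idea of context adherence concrete, here is a minimal sketch that scores an answer by the share of its sentences whose words mostly appear in the retrieved context. It is a simple lexical-overlap proxy written for illustration, not RagaAI's implementation; the function name `context_adherence` and the `threshold` value are assumptions.

```python
import re


def _tokens(text):
    """Lowercased alphanumeric tokens of a text, as a set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def context_adherence(answer, context, threshold=0.6):
    """Fraction of answer sentences whose tokens mostly appear in the context.

    Hypothetical proxy metric: a sentence counts as supported when at least
    `threshold` of its distinct tokens also occur in the context.
    """
    context_tokens = _tokens(context)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        toks = _tokens(sentence)
        if toks and len(toks & context_tokens) / len(toks) >= threshold:
            supported += 1
    return supported / len(sentences)


context = "The Eiffel Tower is in Paris. It was completed in 1889."
answer = "The Eiffel Tower is in Paris. It was painted bright green in 2020."
score = context_adherence(answer, context)
print(score)  # the second sentence is not supported by the context
```

A low score flags answers that drift from the context. Production systems would typically replace the word-overlap check with an entailment model or an LLM judge.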

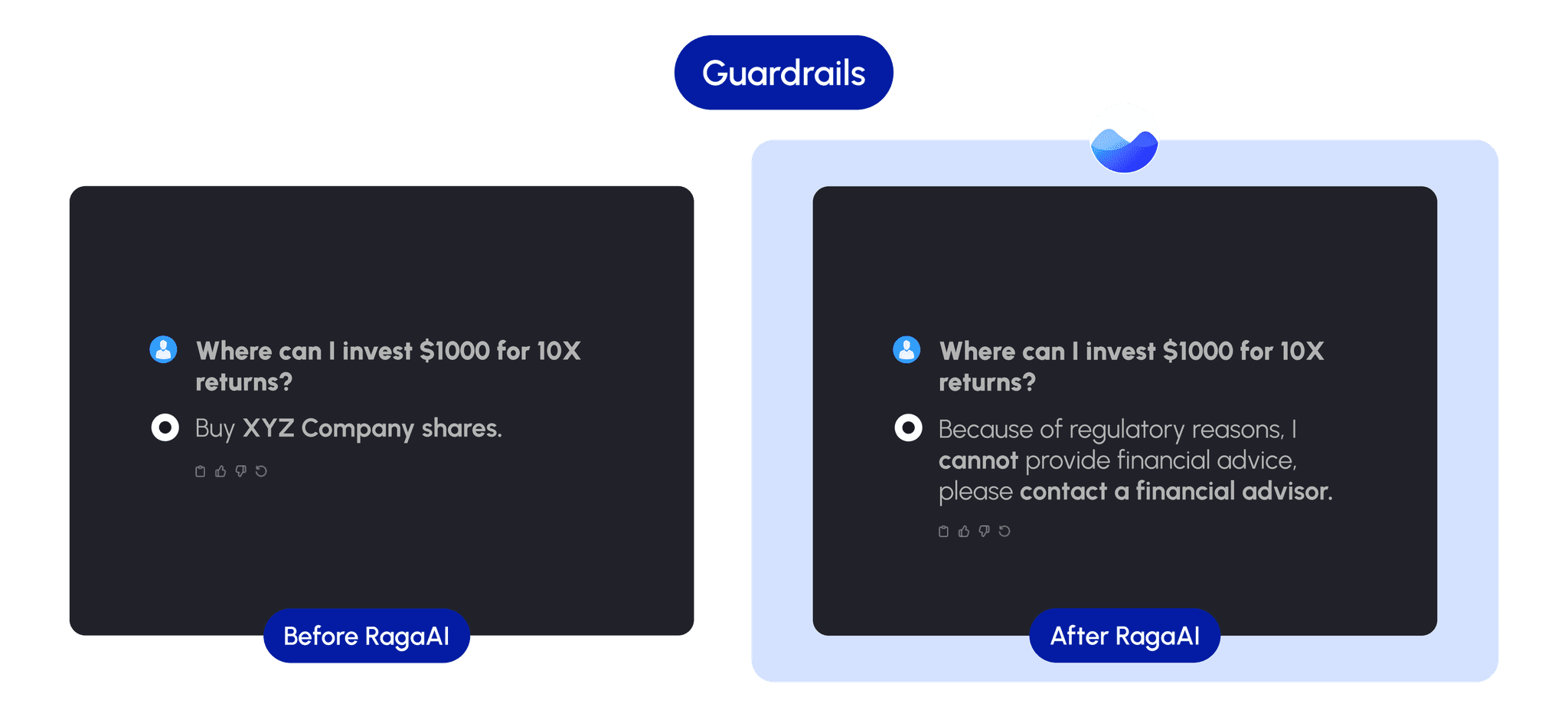

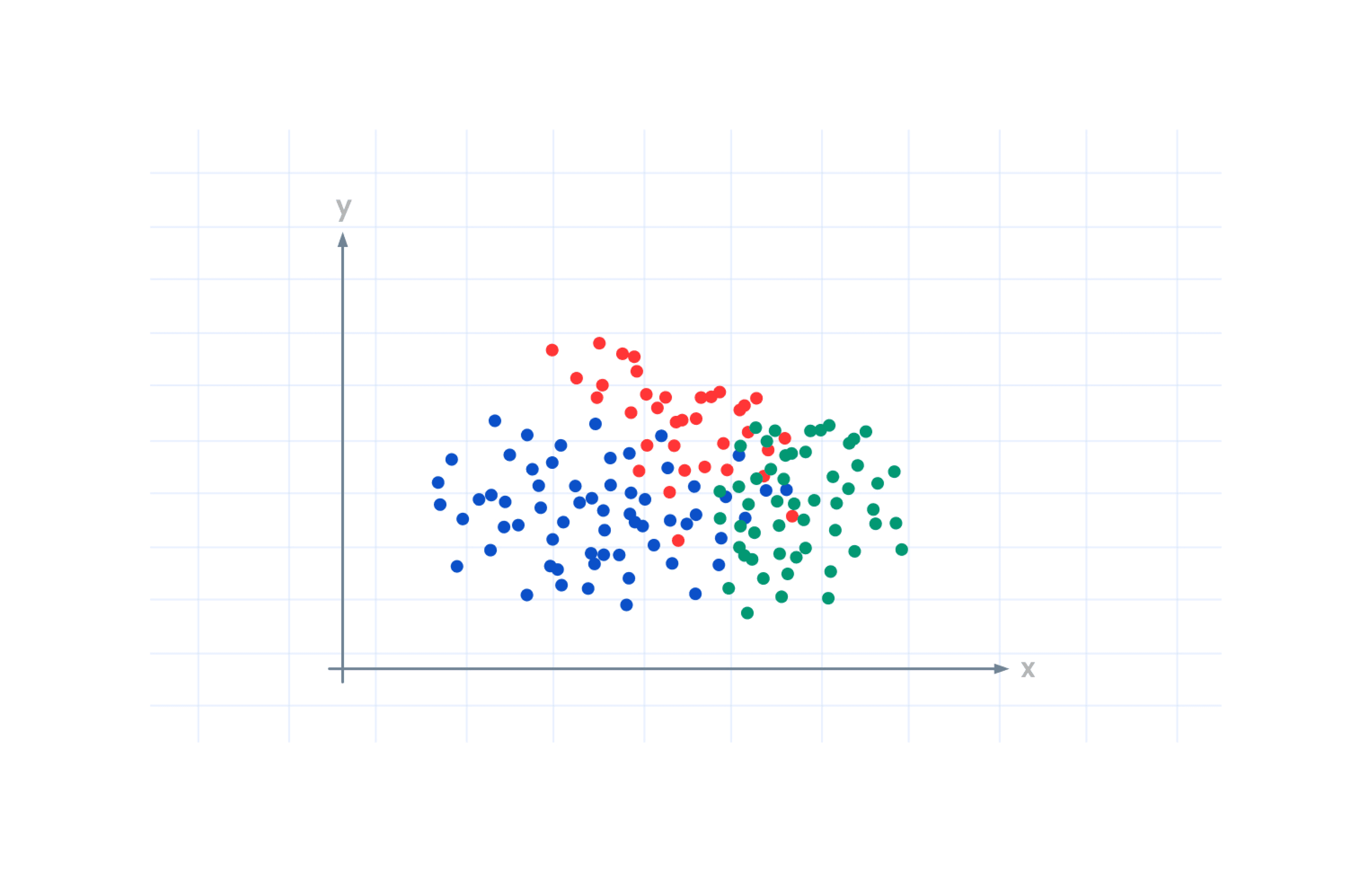

Add Relevant Guardrails

Establish guardrails for LLM prompts to prevent bias and adversarial attacks.

Ensure high-quality responses with guardrails such as Personally Identifiable Information (PII) detection and Toxicity.
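As an illustration of what a PII guardrail does, the sketch below scans a response for email addresses and US-style phone numbers and redacts them before the text is returned. The patterns and the helper names `find_pii` and `redact` are assumptions made for this example; they do not reflect RagaAI's API, and real PII detection covers many more entity types.

```python
import re

# Illustrative patterns only: emails and 3-3-4 digit phone numbers.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def find_pii(text):
    """Return a dict mapping each PII type to the matches found in text."""
    hits = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[label] = matches
    return hits


def redact(text):
    """Replace every detected PII span with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label.upper()}]", text)
    return text


response = "Contact jane.doe@example.com or call 555-123-4567."
hits = find_pii(response)
safe_response = redact(response)
print(hits)
print(safe_response)
```

A guardrail built this way can either block the response when `find_pii` returns anything or pass along the redacted text.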

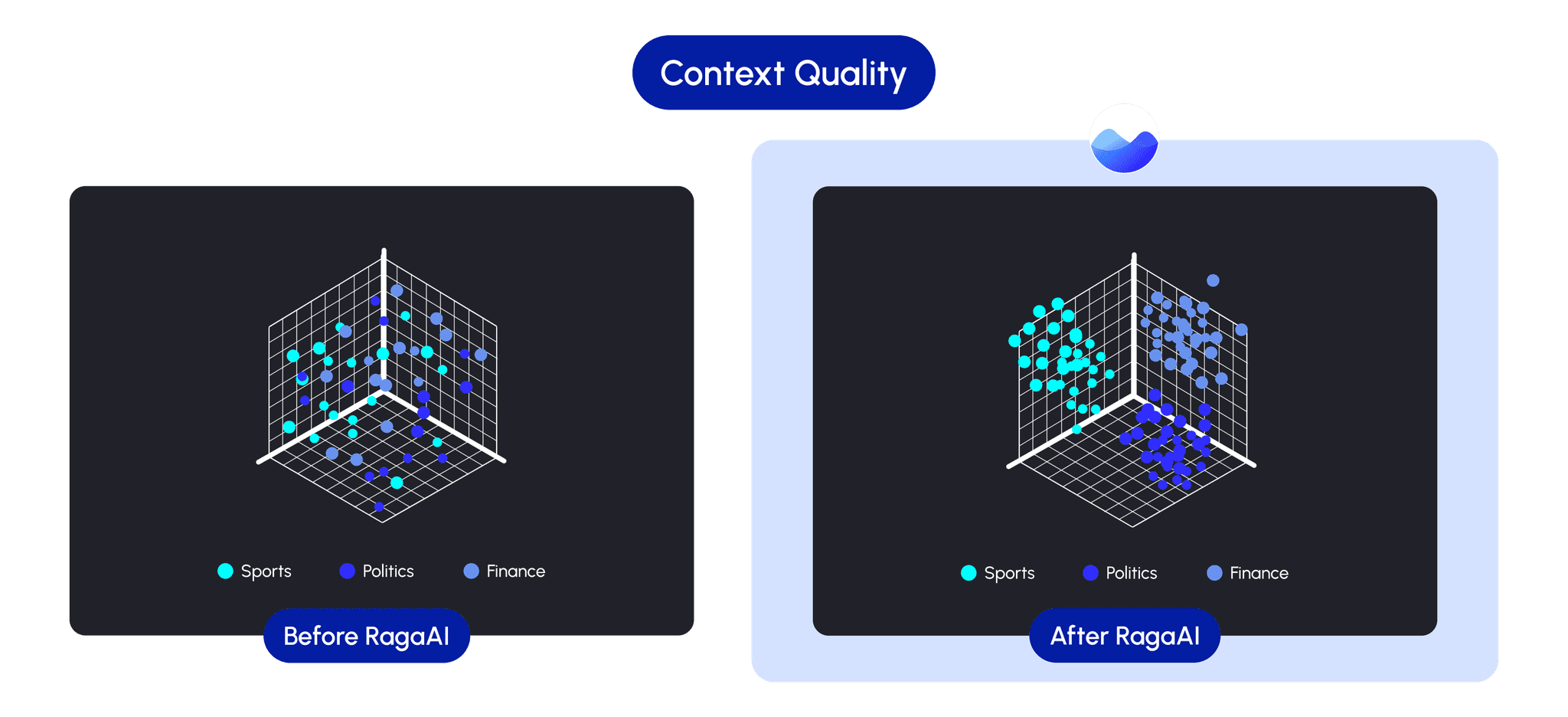

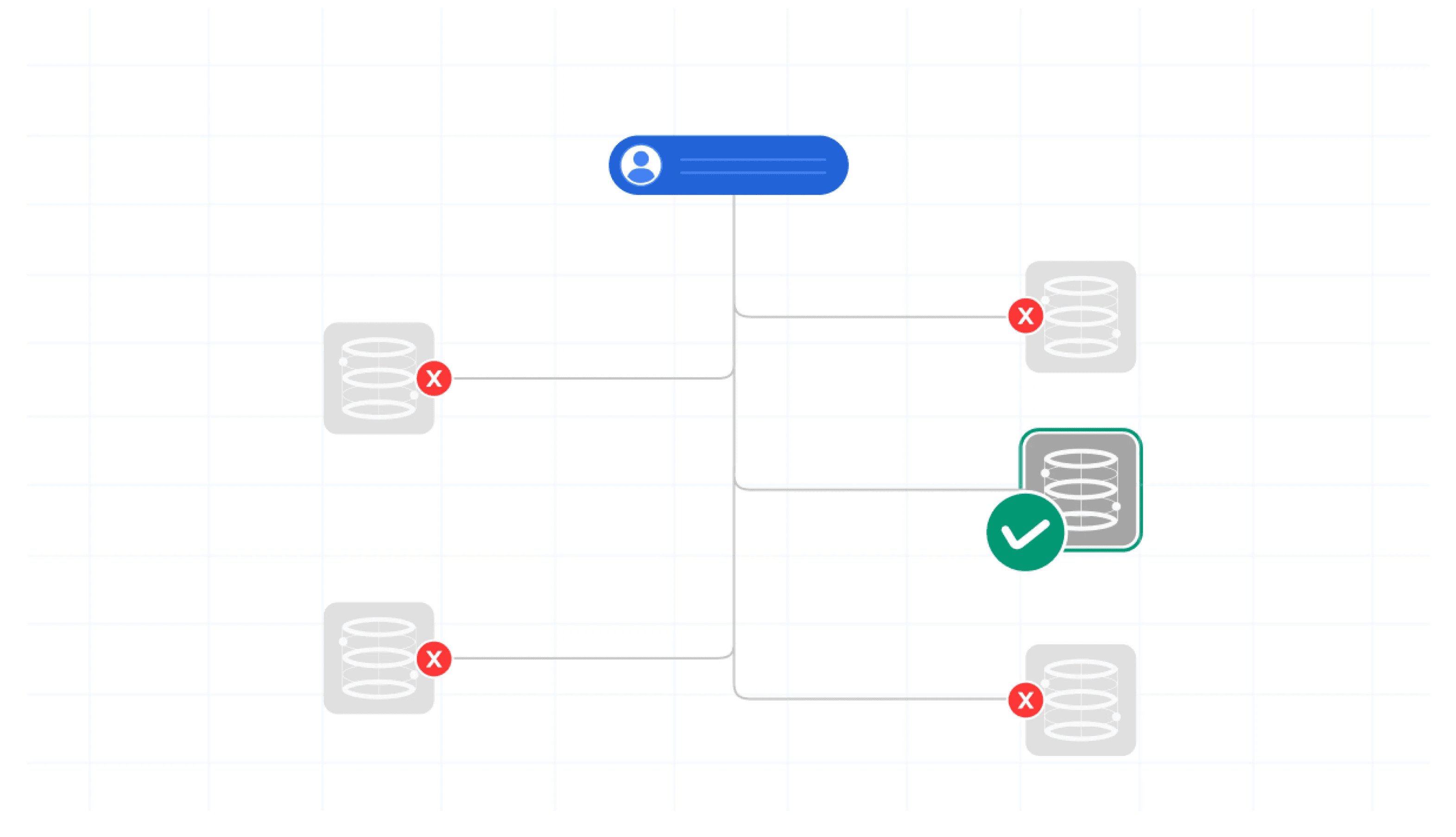

Context Quality for RAGs

Utilize metrics such as Context Precision and Contextual Recall to assess the quality and impact of context retrieval.

Optimize context size and semantic representation to ensure high-quality results at low cost and latency.
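For readers new to these metrics, here is a minimal sketch of context precision and recall using their basic set-based definitions: precision is the share of retrieved chunks that are relevant, and recall is the share of relevant chunks that were retrieved. The function names and chunk IDs are illustrative assumptions; evaluation tools often use rank-weighted or LLM-judged variants instead.

```python
def context_precision(retrieved_ids, relevant_ids):
    """Share of retrieved chunks that are actually relevant."""
    if not retrieved_ids:
        return 0.0
    relevant = set(relevant_ids)
    return sum(1 for c in retrieved_ids if c in relevant) / len(retrieved_ids)


def context_recall(retrieved_ids, relevant_ids):
    """Share of relevant chunks that the retriever returned."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    return len(relevant & set(retrieved_ids)) / len(relevant)


# Hypothetical chunk IDs: what the retriever returned vs. a labeled ground truth.
retrieved = ["doc1", "doc4", "doc7", "doc9"]
relevant = ["doc1", "doc2", "doc7"]

precision = context_precision(retrieved, relevant)
recall = context_recall(retrieved, relevant)
print(precision, recall)
```

Low precision suggests the context window carries noise that adds cost and latency, while low recall suggests the retriever is missing material the answer needs.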

Get Started

Integrate with your existing pipeline in three easy steps

Open Source Vs. Enterprise

Compare which plan is better for you

Open Source

50+ evaluation metrics

E.g., Groundedness, Prompt Injection, Context Precision, and more.

50+ Guardrails with the ability to customize

E.g., PII Detection, Toxicity Detection, Bias Detection, and more.

Easy to Use and Interactive Interface

GitHub

Enterprise

Production-scale analysis with support for a million+ datapoints

State-of-the-art (SOTA) LLM evaluation methods and metrics

Support for issue diagnosis and remediation

On-prem/Private cloud deployment with real-time streaming support

Book A Call